This is the annotated version of a presentation I gave at the 5th USENIX Workshop on Hot Topics in Security (HotSec '10). My slides tend to be designed to complement what I'm saying rather than to stand alone, so I'm writing out approximately what I said during my presentation so that you can get the whole sense of it. I also make heavy use of Creative Commons content to put together my presentations: click through each image for attributions and more details about the photos used.

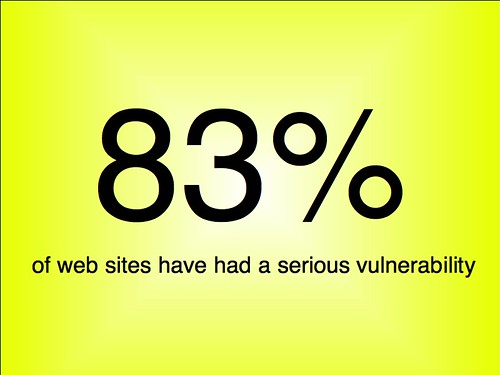

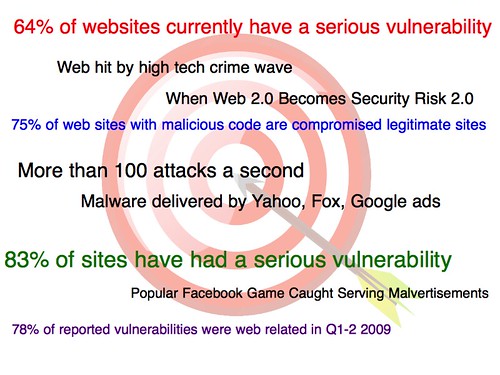

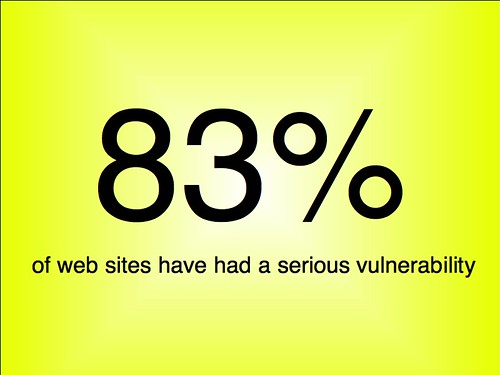

According to WhiteHat Security, 83% of websites they looked at had a serious vulnerability at some point in their lifetimes.

They found that nearly two-thirds of all websites currently had such a vulnerability.

So really, we should be asking ourselves... why?

What makes the web so difficult to secure?

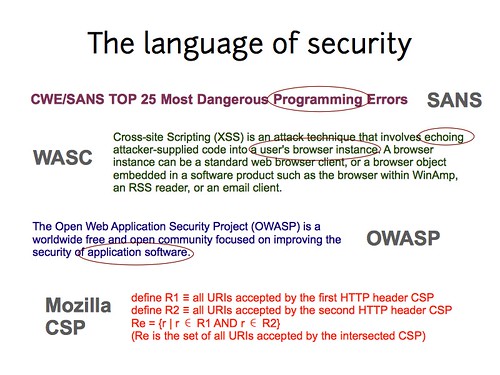

Unfortunately, that's not an easy question to answer. If you asked 20 web security experts, you might get 20 different answers...

From technologies to attackers to standards... there are a lot of little things that can go wrong and result in an insecure web page.

I don't have time to talk about all of them and I certainly don't know how to solve all of them, so I'm going to focus on one particular issue...

And that's that there are no restrictions within a web page.

So in the typical way of describing things, your browser makes a sandbox for your web page to play in.

So you put your cute little baby web page in there, and things are pretty good. But eventually, you get bored...

And you want to add some toys in. User comments, latest status updates, advertisements, pictures. There's a lot of toys available for your web page. And that's great...

... if your web page is filled with nothing but cute and cuddly things that like to play together. But even cute and cuddly things have accidents...

And not every bit of stuff that gets added to a web page is necessarily safe. It's quite easy to wind up with sharks in your sandbox.

We've actually got some great web security work on mashups that deals with separation, so you can put all those potential sharks into separate tanks and keep other content safe.

So your web page becomes a bit more like an aquarium with lots of separate boxes or containers or fish tanks.

But even though we have known ways to add separation, web developers don't use it. And then you wind up with sharks pretty much everywhere...

(This actually isn't photoshopped; it's a real art installation.)

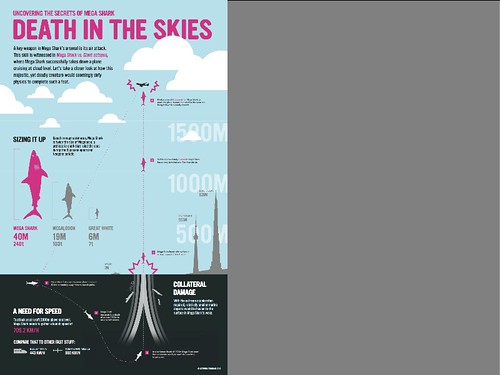

And if you're worried about sharks running into houses, you should be especially worried about the menace that is MegaShark. If you've watched the trailers, you know that MegaShark is a giant shark capable of jumping out of the ocean into the air and taking out an airplane.

[pause]

But no, I'm not here to talk about MegaShark.

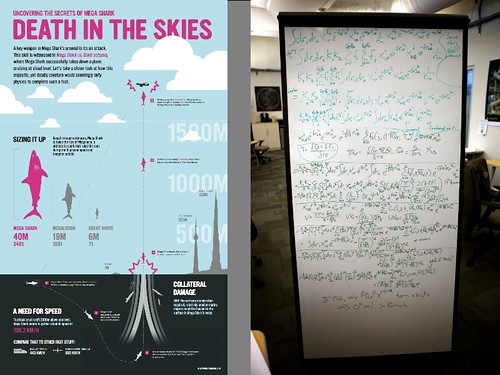

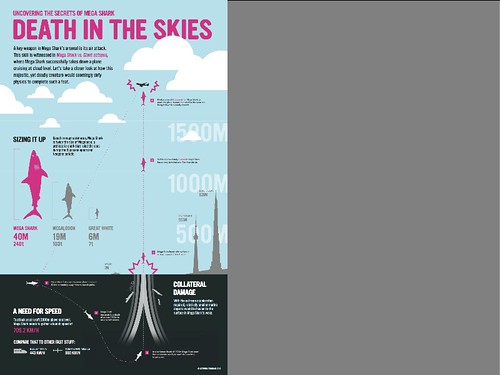

What I want you to see is that the picture I have up here is an infographic. That's a graphical way to represent data, usually statistics, used by magazines and others who want to convey complex data in a way that people can readily understand.

So here you can see visually how much bigger MegaShark is than a great white or even a megalodon. The infographic shows you how fast MegaShark would have to be going, reminds you that a shark travelling that quickly would damage other nearby boats, and so on.

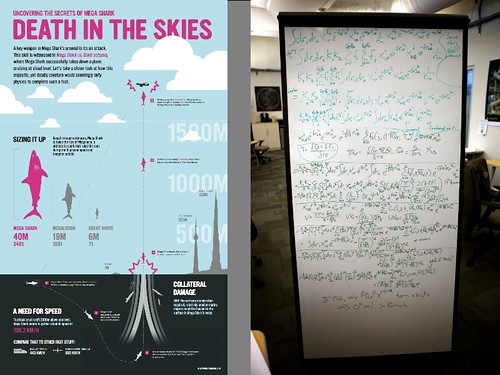

It's not the only way to represent the information. One could also use the equations that were used to calculate the speed of the shark. This lets you get a lot more detailed information, like the density of the water in the San Francisco Bay.

But you can only glean that information if you understand the equations. I have a math degree, and I can tell you that I certainly can't get that information at a glance: you need to know the symbols used, the physics, etc. It may provide great detailed information to experts, but for many people it will be impenetrable, and even for experts it's going to take a lot more time to analyze.

So that's two ways to represent information, one which is very good for quick explanation and memorable presentation, another which provides greater detail and precision.

But what does this have to do with web pages?

Well, the thing you should note is that the people who make web pages are often the same sort of people who make infographics. They're graphic designers, often with artistic backgrounds, and they like to work within the visual space, often to reach a wide audience.

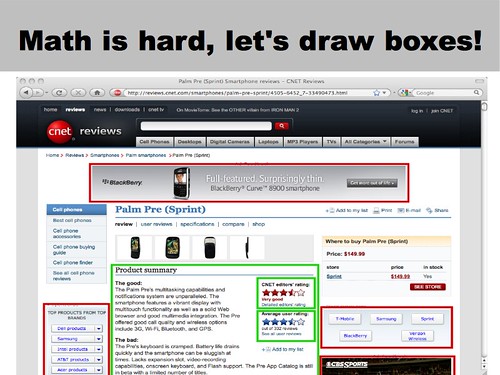

And that's the sort of thinking that inspired my work on visual security policy. Existing work allows extensive customization of policy, but it doesn't really offer a higher-level, at-a-glance way to deal with web page security.

Or to put it more flippantly... Math is hard, let's draw boxes.

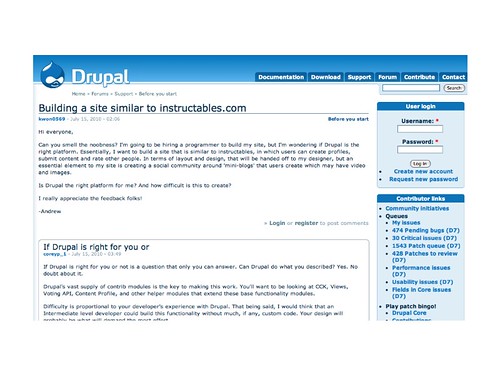

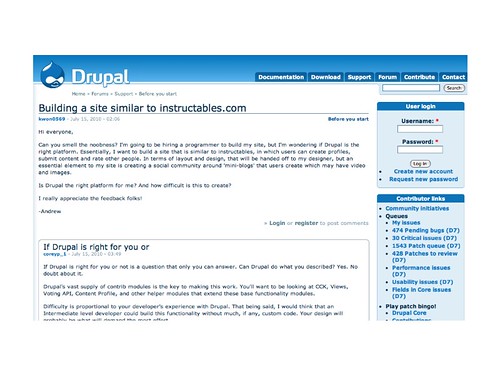

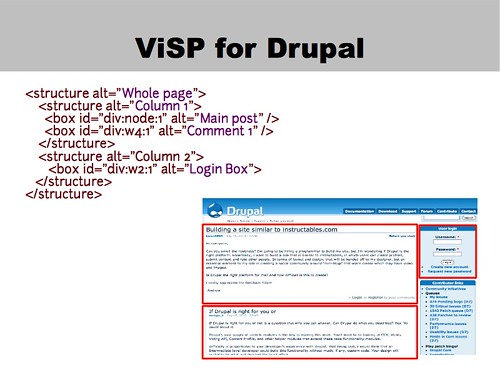

So here's an example. Let's say you're running a site with forums. This is the support forum for Drupal, a content management system. People post their questions, and other people can help them out with answers.

But what if one of those people answering wasn't interested in being helpful so much as in gaining control over other users? Suppose this person was able to inject a little bit of code. (I don't know of any vulnerabilities in Drupal right now, but remember: with over 80% of sites vulnerable at some point in their lifetimes, for many sites it may just be a matter of time.)

So here, let's suppose poster #2 has injected some code that changes the login box so that it sends usernames and passwords out to attacker.com.

That's about two lines of code, so it's easy enough to disguise and hide in a lengthy comment.

If we wanted to stop this using boxes, we'd probably take a look at the page and think “well, that's user-inserted content there and there... there could be sharks!” so you could put a box around each comment separately. And then we might realize that login box contains the username and password, so we should probably protect it too. Into a box it goes! That way if we missed a source of user content, it's still protected.

So if poster #2 goes and tries to attack the page, they get stopped in their own box, and they cannot change the login box, so nothing gets sent out to attacker.com.
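Here's a minimal Python sketch of the kind of check a box implies. It's an illustrative assumption, not the ViSP prototype's code: the element ids (`comment-2`, `login-form`, and so on), the `BOXES` table, and the rule that script may only modify elements inside its own box are all hypothetical simplifications of the Drupal example.

```python
from typing import Dict, Optional

# Hypothetical mapping from page element ids to the box that contains them,
# loosely following the Drupal forum example: each comment gets its own box,
# and the login form gets another.
BOXES: Dict[str, str] = {
    "comment-1": "comment-1",
    "comment-2": "comment-2",
    "login-form": "login",
    "login-username": "login",
    "login-password": "login",
}


def box_of(element_id: str) -> Optional[str]:
    """Return the box that contains an element, or None if it is unboxed."""
    return BOXES.get(element_id)


def may_modify(script_box: str, target_element: str) -> bool:
    """Assumed rule: script may only modify elements inside its own box."""
    return box_of(target_element) == script_box


# Poster #2's injected code tries to rewrite the login form's action URL.
print(may_modify("comment-2", "login-form"))   # False: the login box blocks it
print(may_modify("comment-2", "comment-2"))    # True: its own box
print(may_modify("login", "login-password"))   # True: same box
```

A real enforcer would have to intercept DOM operations in the browser; the point here is only that the decision reduces to "which box is the script in, and which box is the target in?"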

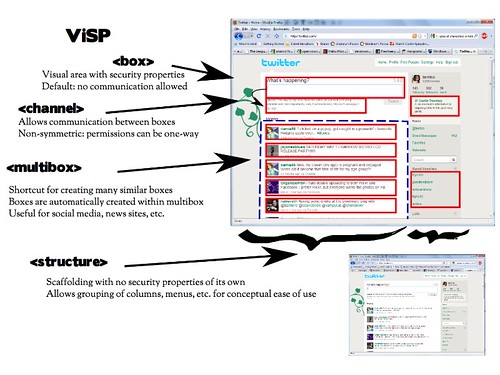

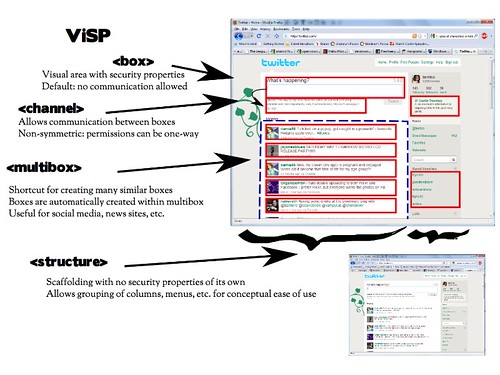

Visual Security Policy (or ViSP for short) has 4 components. The first, as we saw in the example, is a box: a visual area on screen that has an associated security policy.

The second is a channel, which allows communication between boxes. This can be one-way.

Then there's the multibox, which is a bit different in that it's more of a shortcut. There are many cases where a page has a whole bunch of similar things: lists of status updates, news stories, comments, etc. We might want to give them all similar security properties, and the multibox lets us do that. Also, sometimes the “next” button adds things into the page instead of loading a new one, so the multibox means you don't have to care whether there are 5 things or 20: they'll all still be boxed up.

Finally, there's structure, which is the... invisible part of visual security policy. It lets you group things into columns, etc. even if the column itself shouldn't have any special security policy.
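To make the difference between boxes, multiboxes, and structure concrete, here's a small Python sketch of how a policy tree might be flattened into the elements that actually get boxed. The class names, the `concrete_boxes` function, and the `live_children` lookup are hypothetical; channels are left out to keep it short.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Box:
    """A visual region, identified by an HTML element id, with its own policy."""
    element_id: str


@dataclass
class Multibox:
    """Shortcut: every current child of the container gets a box of its own."""
    container_id: str


@dataclass
class Structure:
    """Invisible grouping (such as a column) that carries no policy itself."""
    element_id: str
    children: list = field(default_factory=list)  # Boxes, Multiboxes, Structures


def concrete_boxes(node, live_children: Dict[str, List[str]]) -> List[str]:
    """Flatten a policy tree into the element ids that are actually boxed.

    live_children maps a multibox container id to the ids of its children
    right now, so re-running this after a "next" button appends items boxes
    the new items too.
    """
    if isinstance(node, Box):
        return [node.element_id]
    if isinstance(node, Multibox):
        return list(live_children.get(node.container_id, []))
    if isinstance(node, Structure):
        ids: List[str] = []
        for child in node.children:
            ids.extend(concrete_boxes(child, live_children))
        return ids
    raise TypeError(f"not a ViSP component: {node!r}")


page = Structure("main-column", [Box("login"), Multibox("comments")])
print(concrete_boxes(page, {"comments": ["comment-1", "comment-2"]}))
# ['login', 'comment-1', 'comment-2']
print(concrete_boxes(page, {"comments": [f"comment-{i}" for i in range(1, 6)]}))
# ['login', 'comment-1', 'comment-2', 'comment-3', 'comment-4', 'comment-5']
```

Note that the structure node (`main-column`) never appears in the output: it only groups things, exactly as described above.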

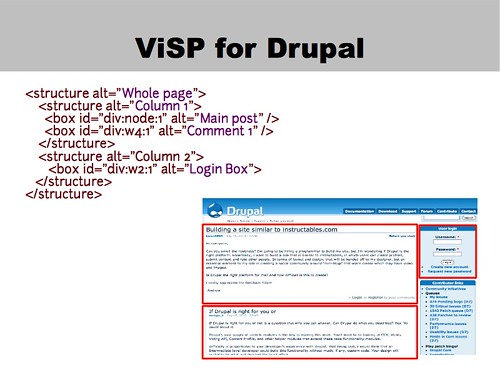

So here's what the ViSP would look like for our Drupal example. It's short XML, and you'll note that the id attributes tie each part of the policy to the underlying HTML.
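The actual policy is on the slide and isn't reproduced here, so the XML below uses hypothetical element names (`visp`, `structure`, `box`, `multibox`) chosen to mirror the four components. The sketch uses Python's standard `xml.etree.ElementTree` to show how the `id` attributes link each policy component to an element in the HTML.

```python
import xml.etree.ElementTree as ET

# Hypothetical ViSP policy for the Drupal forum example. The element names
# are illustrative only; they are not the syntax from the paper.
POLICY = """
<visp>
  <structure id="content">
    <box id="login"/>
    <multibox id="comments"/>
  </structure>
</visp>
"""


def components_by_id(policy_xml: str) -> dict:
    """Map each referenced HTML id to the ViSP component that governs it."""
    root = ET.fromstring(policy_xml)
    mapping = {}
    for elem in root.iter():  # document order, starting with the root
        elem_id = elem.get("id")
        if elem_id is not None:
            mapping[elem_id] = elem.tag
    return mapping


print(components_by_id(POLICY))
# {'content': 'structure', 'login': 'box', 'comments': 'multibox'}
```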

But this is a relatively small example. What would ViSP look like on a larger site?

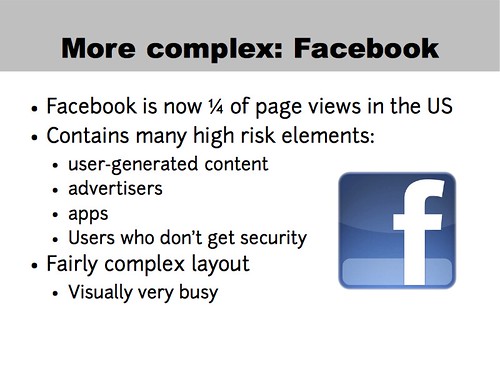

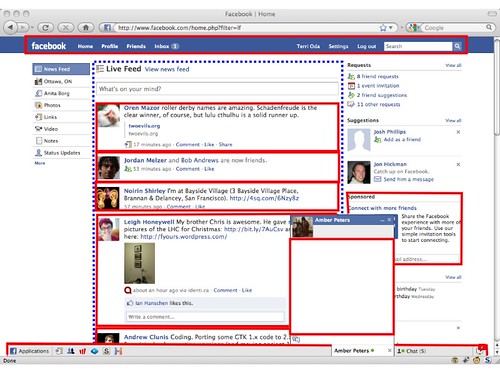

So let's look at Facebook. With ¼ of the page views in the US, you pretty much have to be able to handle Facebook if you want to claim you have a system that can do web security. While you might have to whitelist Facebook itself, elements of it will show up on other sites because that's what people expect.

And some of those are high-risk elements: user-generated content, advertisers, apps, and people who sometimes don't realise the risks they're taking. And of course, it's a fairly complex layout, which could be an issue for a visual solution.

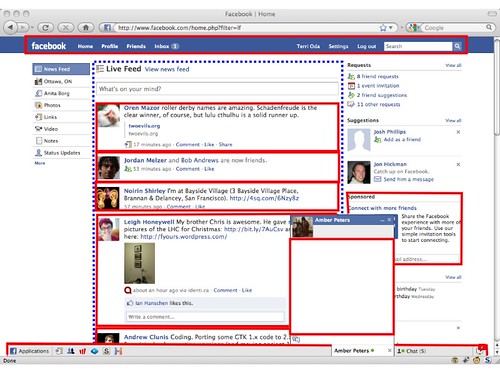

So here's what Facebook looked like a little while ago. They've since redesigned, but many of the elements, like the menu bars, are still there.

And here's what a visual security policy for Facebook might look like. I've protected menu bars on the top and bottom because attackers might modify those to facilitate phishing attacks. There's my chat on the right and an advertisement on the far right, and then there's a big multibox with all my friends' status updates in there. I might trust my friends, but you never know when someone might get their account compromised or hit with a virus or something, so we want to separate those out.

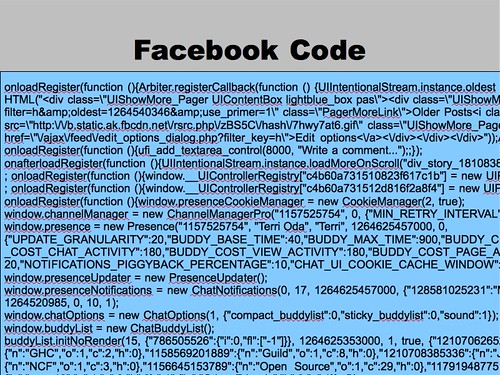

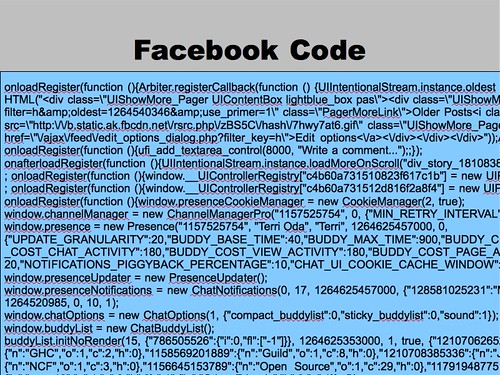

And here's what that fairly visually busy policy looks like in XML. Not too bad, really.

... Especially when you compare it to the actual code for Facebook. This is some of the code used to generate the page I showed you (you can see my name in there). It's complex JavaScript, and it can be surprisingly difficult to figure out where a box should begin and end in all that mess. And that's not a critique of Facebook specifically: many websites are generated from a variety of server- and client-side systems. Writing policy for generated HTML can be very complex, and that could be one of the reasons so few web developers have embraced security policy.

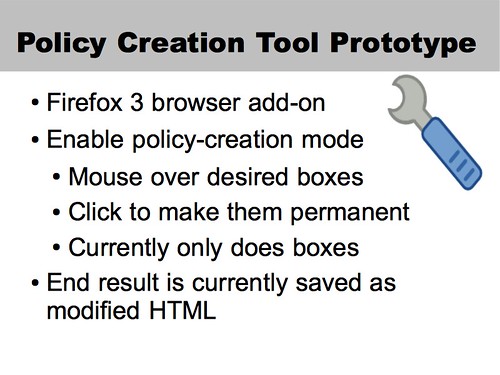

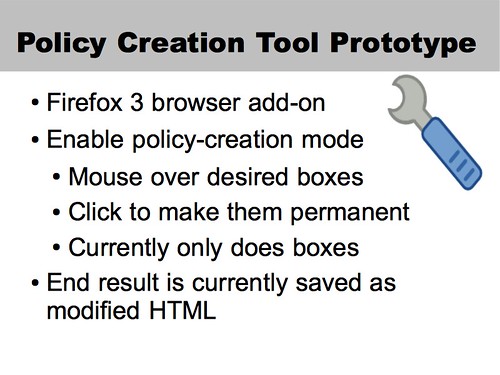

The real question at this point is “does it work?” and I can tell you that I do indeed have a working prototype. You put it into policy creation mode through the menu or a keystroke, mouse over the page, and click to draw the boxes. Right now, it only handles boxes: you have to write in channels and multiboxes by hand.
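The prototype's internals aren't described here, so this is only a guess at its final step: turning the regions a developer clicked on into a box-only policy. The `policy_from_clicks` function and the `visp`/`box` element names are hypothetical; the XML is built with Python's standard `xml.etree.ElementTree`.

```python
import xml.etree.ElementTree as ET
from typing import Iterable


def policy_from_clicks(clicked_ids: Iterable[str]) -> str:
    """Turn the element ids a developer clicked on into a box-only policy.

    Channels and multiboxes are not generated, mirroring the prototype's
    current limitation of drawing boxes only.
    """
    root = ET.Element("visp")
    seen = set()
    for element_id in clicked_ids:
        if element_id in seen:  # clicking the same region twice adds one box
            continue
        seen.add(element_id)
        ET.SubElement(root, "box", {"id": element_id})
    return ET.tostring(root, encoding="unicode")


print(policy_from_clicks(["login", "comment-1", "comment-2", "login"]))
# <visp><box id="login" /><box id="comment-1" /><box id="comment-2" /></visp>
```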

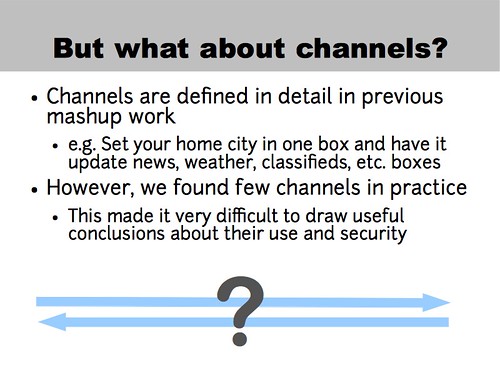

Now, you may be asking... what about the properties of channels? How do they work? And the answer is “I wish I could tell you.”

Channels are a staple of the existing work on mashups, with the idea that you'd want to set up a page so changing, say, your city could also update news, weather, etc. in other parts of the page. But within my test set, I was surprised to find very little use of this sort of in-page communication. I don't know if this is an artifact of the pages we chose, or if there simply isn't much communication going on within the page. Perhaps most communication comes from attackers? I really don't know the answers.
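Even with their detailed properties still open, the one-way nature of a channel is easy to illustrate. This Python sketch models the city example above; the box names, the `CHANNELS` set, and the `deliver` function are hypothetical, and messages are simply refused unless a channel runs from the sender to the recipient.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical one-way channels: the city picker may notify the news and
# weather boxes, but nothing may send messages back to the city picker.
CHANNELS: Set[Tuple[str, str]] = {
    ("city-picker", "news"),
    ("city-picker", "weather"),
}


def deliver(sender: str, recipients: List[str], message: str,
            inboxes: Dict[str, List[str]]) -> List[str]:
    """Deliver a message only along declared channels; return refused recipients."""
    refused = []
    for recipient in recipients:
        if (sender, recipient) in CHANNELS:
            inboxes[recipient].append(message)
        else:
            refused.append(recipient)
    return refused


inboxes: Dict[str, List[str]] = defaultdict(list)
print(deliver("city-picker", ["news", "weather"], "city=example", inboxes))  # []
print(deliver("weather", ["city-picker"], "city=other", inboxes))  # ['city-picker']
```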

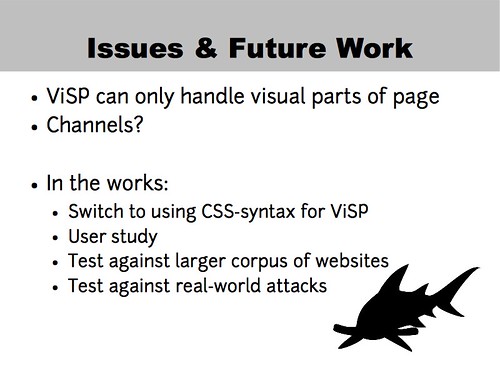

So here are some of the issues we found and some things I'd like to do. The big issue with ViSP is that it can only handle visual parts of the page, so if you've got JavaScript in your header, there's no way to encapsulate that. We found that in many cases, JavaScript was included where it was used, so you'd have menu code and the menu right together where the menu is displayed in the page instead of in the headers. But that may not always be the case.

It's not yet clear how ViSP should handle that case, just as it's not yet clear how channels should work.
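One practical first step would be simply finding the scripts a visual policy can't cover. This sketch uses Python's standard `html.parser` to list scripts that aren't nested inside any boxed element. The `UnboxedScriptFinder` class and the box ids are hypothetical, and it assumes well-formed HTML in which every non-void element is closed.

```python
from html.parser import HTMLParser
from typing import List, Set

# Elements that never have a closing tag, so they must not be pushed on the stack.
VOID_ELEMENTS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
                 "link", "meta", "source", "track", "wbr"}


class UnboxedScriptFinder(HTMLParser):
    """Collect scripts that are not nested inside any boxed element."""

    def __init__(self, boxed_ids: Set[str]) -> None:
        super().__init__()
        self.boxed_ids = boxed_ids
        self.open_elements: List[bool] = []  # True if that open element is a box
        self.unboxed: List[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "script" and not any(self.open_elements):
            self.unboxed.append(attributes.get("src") or "inline script")
        if tag not in VOID_ELEMENTS:
            self.open_elements.append(attributes.get("id") in self.boxed_ids)

    def handle_endtag(self, tag):
        if tag not in VOID_ELEMENTS and self.open_elements:
            self.open_elements.pop()


PAGE = """
<html><head><script src="analytics.js"></script></head>
<body>
  <div id="menu"><script src="menu.js"></script><ul><li>Home</li></ul></div>
  <div id="login"><form><input name="user"></form></div>
</body></html>
"""

finder = UnboxedScriptFinder({"menu", "login"})
finder.feed(PAGE)
print(finder.unboxed)  # ['analytics.js']: the header script, outside any box
```

The menu script is fine because it sits inside the boxed menu, which matches the pattern we saw in many pages; the header script is the case ViSP can't yet express.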

Several people, including one of my anonymous reviewers, rightly suggested that ViSP might be even easier to use if it could be deployed not as separate XML but as a “security stylesheet” in CSS. So we're working on that. We're also putting together a user study for the fall so we can answer the question of whether it really is more usable. And of course, there are more tests to run against other websites and real-world attacks.

Since this is HotSec, here are a few questions to get the discussion started:

- Is ViSP really more usable? I've gotten really positive responses in my informal discussions with web folk, but it's still an open question.

- How much communication goes on within the page? Was that a fluke of our test set or have we learned something about normal web behaviours?

And finally

- What technologies should ViSP play well with to provide a complete solution?

This is only one piece of the web security puzzle, dealing with one part of the problem. How does it need to interact with the other pieces to provide a complete solution?

Thanks for listening!

Want to know more? You can read the whole paper "Visual Security Policy for the Web" at the following locations:

You can also comment here or contact me at terri (at) zone12.com if you have any questions or ideas you'd like to discuss.